https://en.wikipedia.org/wiki/Logic

This article is about the study of correct reasoning. For other uses, see Logic (disambiguation) and Logician (disambiguation).

Logic studies valid forms of inference like the modus ponens.

Logic is the study of correct reasoning. It includes both formal and informal logic. Formal logic is the science of deductively valid inferences or of logical truths. It is a formal science investigating how conclusions follow from premises in a topic-neutral way. When used as a countable noun, the term "a logic" refers to a logical formal system that articulates a proof system. Formal logic contrasts with informal logic, which is associated with informal fallacies, critical thinking, and argumentation theory. While there is no general agreement on how formal and informal logic are to be distinguished, one prominent approach associates their difference with whether the studied arguments are expressed in formal or informal languages. Logic plays a central role in multiple fields, such as philosophy, mathematics, computer science, and linguistics.

Logic studies arguments, which consist of a set of premises together with a conclusion. Premises and conclusions are usually understood either as sentences or as propositions and are characterized by their internal structure; complex propositions are made up of simpler propositions linked to each other by propositional connectives like ∧ (and) or → (if...then). The truth of a proposition usually depends on the denotations of its constituents. Logically true propositions constitute a special case, since their truth depends only on the logical vocabulary used in them and not on the denotations of other terms.

Arguments can be either correct or incorrect. An argument is correct if its premises support its conclusion. The strongest form of support is found in deductive arguments: it is impossible for their premises to be true and their conclusion to be false. Deductive arguments contrast with ampliative arguments, whose conclusions may contain new information that is not present in the premises. This comes at a cost: it is possible for all the premises of an ampliative argument to be true while its conclusion is still false. Many arguments found in everyday discourse and the sciences are ampliative arguments, sometimes divided into inductive and abductive arguments. Inductive arguments usually take the form of statistical generalizations, while abductive arguments are inferences to the best explanation. Arguments that fall short of the standards of correct reasoning are called fallacies.

Systems of logic are theoretical frameworks for assessing the correctness of reasoning and arguments. Logic has been studied since antiquity; early approaches include Aristotelian logic, Stoic logic, Anviksiki, and the Mohists. Modern formal logic has its roots in the work of late 19th-century mathematicians such as Gottlob Frege. While Aristotelian logic focuses on reasoning in the form of syllogisms, in the modern era its traditional dominance was replaced by classical logic, a set of fundamental logical intuitions shared by most logicians. It consists of propositional logic, which only considers the logical relations on the level of propositions, and first-order logic, which also articulates the internal structure of propositions using various linguistic devices, such as predicates and quantifiers. Extended logics accept the basic intuitions behind classical logic and extend it to other fields, such as metaphysics, ethics, and epistemology. Deviant logics, on the other hand, reject certain classical intuitions and provide alternative accounts of the fundamental laws of logic.

Definition

The word "logic" originates from the Greek word "logos", which has a variety of translations, such as reason, discourse, or language.[1][2] Logic is traditionally defined as the study of the laws of thought or correct reasoning,[3] and is usually understood in terms of inferences or arguments. Reasoning may be seen as the activity of drawing inferences whose outward expression is given in arguments.[3][4][5] An inference or an argument is a set of premises together with a conclusion. Logic is interested in whether arguments are good or inferences are valid, i.e. whether the premises support their conclusions.[6][7][8] These general characterizations apply to logic in the widest sense, since they are true both for formal and informal logic, but many definitions of logic focus on the more paradigmatic formal logic. In this narrower sense, logic is a formal science that studies how conclusions follow from premises in a topic-neutral way.[9][10][11] In this regard, logic is sometimes contrasted with the theory of rationality, which is wider since it covers all forms of good reasoning.

Alfred Tarski was an influential defender of the idea that logical truth can be defined in terms of possible interpretations.[13][14]

As a formal science, logic contrasts with both the natural and social sciences in that it tries to characterize the inferential relations between premises and conclusions based on their structure alone.[15][16] This means that the actual content of these propositions, i.e. their specific topic, is not important for whether the inference is valid or not.[9][10] Valid inferences are characterized by the fact that the truth of their premises ensures the truth of their conclusion: it is impossible for the premises to be true and the conclusion to be false.[8][17] The general logical structures characterizing valid inferences are called rules of inference.[6] In this sense, logic is often defined as the study of valid arguments.[4] This contrasts with another prominent characterization of logic as the science of logical truths.[18] A proposition is logically true if its truth depends only on the logical vocabulary used in it. This means that it is true in all possible worlds and under all interpretations of its non-logical terms.[19] These two characterizations of logic are closely related to each other: an inference is valid if the material conditional from its premises to its conclusion is logically true.[18]

The term "logic" can also be used in a slightly different sense as a countable noun. In this sense, a logic is a logical formal system. Distinct logics differ from each other concerning the rules of inference they accept as valid and concerning the formal languages used to express them.[4][20][21] Starting in the late 19th century, many new formal systems have been proposed. There are various disagreements concerning what makes a formal system a logic.[4][21] For example, it has been suggested that only logically complete systems qualify as logics. For such reasons, some theorists deny that higher-order logics and fuzzy logic are logics in the strict sense.[4][22][23]

Formal and informal logic

Logic encompasses both formal and informal logic.[4][24] Formal logic is the traditionally dominant field,[17] but applying its insights to actual everyday arguments has prompted modern developments of informal logic,[24][25][26] which considers problems that formal logic on its own is unable to address.[17][26] Both provide criteria for assessing the correctness of arguments and distinguishing them from fallacies.[11][17] Various suggestions have been made concerning how to draw the distinction between the two, but there is no universally accepted answer.[26][27]

Formal logic needs to translate natural language arguments into a formal language, like first-order logic, in order to assess whether they are valid. In this example, the colors indicate how the English words correspond to the symbols.

The most literal approach sees the terms "formal" and "informal" as applying to the language used to express arguments.[24][25][28] On this view, formal logic studies arguments expressed in formal languages while informal logic studies arguments expressed in informal or natural languages.[17][26] This means that the inference from the formulas "P" and "Q" to the conclusion "P ∧ Q" is studied by formal logic. The inference from the English sentences "Al lit a cigarette" and "Bill stormed out of the room" to the sentence "Al lit a cigarette and Bill stormed out of the room", on the other hand, belongs to informal logic. Formal languages are characterized by their precision and simplicity.[28][17][26] They normally contain a very limited vocabulary and exact rules on how their symbols can be used to construct sentences, usually referred to as well-formed formulas.[29][30] This simplicity and exactness of formal logic make it capable of formulating precise rules of inference that determine whether a given argument is valid.[29] This approach brings with it the need to translate natural language arguments into the formal language before their validity can be assessed, a procedure that comes with various problems of its own.[31][15][26] Informal logic avoids some of these problems by analyzing natural language arguments in their original form without the need of translation.[11][24][32] But it faces problems associated with the ambiguity, vagueness, and context-dependence of natural language expressions.[17][33][34] A closely related approach applies the terms "formal" and "informal" not just to the language used, but more generally to the standards, criteria, and procedures of argumentation.[35]

Another approach draws the distinction according to the different types of inferences analyzed.[24][36][37] This perspective understands formal logic as the study of deductive inferences in contrast to informal logic as the study of non-deductive inferences, like inductive or abductive inferences.[24][37] The characteristic of deductive inferences is that the truth of their premises ensures the truth of their conclusion. This means that if all the premises are true, it is impossible for the conclusion to be false.[8][17] For this reason, deductive inferences are in a sense trivial or uninteresting since they do not provide the thinker with any new information not already found in the premises.[38][39] Non-deductive inferences, on the other hand, are ampliative: they help the thinker learn something above and beyond what is already stated in the premises. They achieve this at the cost of certainty: even if all premises are true, the conclusion of an ampliative argument may still be false.[18][40][41]

One more approach tries to link the difference between formal and informal logic to the distinction between formal and informal fallacies.[24][26][35] This distinction is often drawn in relation to the form, content, and context of arguments. In the case of formal fallacies, the error is found on the level of the argument's form, whereas for informal fallacies, the content and context of the argument are responsible.[42][43][44] Formal logic abstracts away from the argument's content and is only interested in its form, specifically whether it follows a valid rule of inference.[9][10] In this regard, it is not important for the validity of a formal argument whether its premises are true or false. Informal logic, on the other hand, also takes the content and context of an argument into consideration.[17][26][28] A false dilemma, for example, involves an error of content by excluding viable options, as in "you are either with us or against us; you are not with us; therefore, you are against us".[45][43] For the strawman fallacy, on the other hand, the error is found on the level of context: a weak position is first described and then defeated, even though the opponent does not hold this position. But in another context, against an opponent that actually defends the strawman position, the argument is correct.[33][43]

Other accounts draw the distinction based on investigating general forms of arguments in contrast to particular instances, or on the study of logical constants instead of substantive concepts. A further approach focuses on the discussion of logical topics with or without formal devices, or on the role of epistemology for the assessment of arguments.[17][26]

Fundamental concepts

Premises, conclusions, and truth

Premises and conclusions

Main article: Premise

Premises and conclusions are the basic parts of inferences or arguments and therefore play a central role in logic. In the case of a valid inference or a correct argument, the conclusion follows from the premises, or in other words, the premises support the conclusion.[7][46] For instance, the premises "Mars is red" and "Mars is a planet" support the conclusion "Mars is a red planet". For most types of logic, it is accepted that premises and conclusions have to be truth-bearers.[7][46][i] This means that they have a truth value: they are either true or false. Thus contemporary philosophy generally sees them either as propositions or as sentences.[7] Propositions are the denotations of sentences and are usually understood as abstract objects.[48][49]

Propositional theories of premises and conclusions are often criticized because of the difficulties involved in specifying the identity criteria of abstract objects or because of naturalist considerations.[7] These objections are avoided by seeing premises and conclusions not as propositions but as sentences, i.e. as concrete linguistic objects like the symbols displayed on a page of a book. But this approach comes with new problems of its own: sentences are often context-dependent and ambiguous, meaning an argument's validity would not only depend on its parts but also on its context and on how it is interpreted.[7][50] Another approach is to understand premises and conclusions in psychological terms as thoughts or judgments. This position is known as psychologism and was heavily criticized around the turn of the 20th century.[7][51][52]

Internal structure

Premises and conclusions have internal structure. As propositions or sentences, they can be either simple or complex.[53][54] A complex proposition has other propositions as its constituents, which are linked to each other through propositional connectives like "and" or "if...then". Simple propositions, on the other hand, do not have propositional parts. But they can also be conceived as having an internal structure: they are made up of subpropositional parts, like singular terms and predicates.[46][53][54] For example, the simple proposition "Mars is red" can be formed by applying the predicate "red" to the singular term "Mars". In contrast, the complex proposition "Mars is red and Venus is white" is made up of two simple propositions connected by the propositional connective "and".[46]

Whether a proposition is true depends, at least in part, on its constituents.[54] For complex propositions formed using truth-functional propositional connectives, their truth only depends on the truth values of their parts.[46] But this relation is more complicated in the case of simple propositions and their subpropositional parts. These subpropositional parts have meanings of their own, like referring to objects or classes of objects.[46][55][56] Whether the simple proposition they form is true depends on their relation to reality, i.e. what the objects they refer to are like. This topic is studied by theories of reference.[56]

Logical truth

Main article: Logical truth

In some cases, a simple or a complex proposition is true independently of the substantive meanings of its parts.[3][57] For example, the complex proposition "if Mars is red, then Mars is red" is true independent of whether its parts, i.e. the simple proposition "Mars is red", are true or false. In such cases, the truth is called a logical truth: a proposition is logically true if its truth depends only on the logical vocabulary used in it.[19][57][46] This means that it is true under all interpretations of its non-logical terms. In some modal logics, this notion can be understood equivalently as truth at all possible worlds.[19][58] Some theorists define logic as the study of logical truths.[18]
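The idea that a logical truth holds under every interpretation can be illustrated, for the propositional case only, by brute force over truth-value assignments. The following is a minimal sketch; the helper names `is_logical_truth` and `implies` are illustrative and not part of the article's sources.

```python
from itertools import product

def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b

def is_logical_truth(formula, num_vars):
    """Return True if `formula` is true under every assignment of truth values."""
    return all(formula(*values) for values in product([True, False], repeat=num_vars))

# "if Mars is red, then Mars is red" has the form p -> p.
print(is_logical_truth(lambda p: implies(p, p), 1))  # True

# "Mars is red" on its own has the form p; its truth depends on the facts.
print(is_logical_truth(lambda p: p, 1))  # False
```

The check only covers truth-functional connectives; it says nothing about logical truths that depend on quantifiers or modal operators.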

Truth tables

Truth tables can be used to show how logical connectives work or how the truth of complex propositions depends on their parts. They have a column for each input variable. Each row corresponds to one possible combination of the truth values these variables can take. The final columns present the truth values of the corresponding expressions as determined by the input values. For example, the expression "p ∧ q" uses the logical connective ∧ (and). It could be used to express a sentence like "yesterday was Sunday and the weather was good". It is only true if both of its input variables, p ("yesterday was Sunday") and q ("the weather was good"), are true. In all other cases, the expression as a whole is false. Other important logical connectives are ∨ (or), → (if...then), and ¬ (not).[59][60] Truth tables can also be defined for more complex expressions that use several propositional connectives. For example, given the conditional proposition p → q, one can form truth tables of its inverse (¬p → ¬q) and its contraposition (¬q → ¬p).[61]

Truth table of various expressions

| p | q | p ∧ q | p ∨ q | p → q | ¬p → ¬q |
|---|---|---|---|---|---|
| T | T | T | T | T | T |
| T | F | F | T | F | T |
| F | T | F | T | T | F |
| F | F | F | F | T | T |
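A truth table of this kind can be generated mechanically by enumerating every combination of input values. The sketch below assumes the four expressions from the table above; the names `COLUMNS`, `truth_table`, and `show` are illustrative.

```python
from itertools import product

def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b

# One entry per output column, keyed by the expression it represents.
COLUMNS = {
    "p ∧ q": lambda p, q: p and q,
    "p ∨ q": lambda p, q: p or q,
    "p → q": lambda p, q: implies(p, q),
    "¬p → ¬q": lambda p, q: implies(not p, not q),
}

def truth_table():
    """Return one row per assignment: (p, q, then each column's value)."""
    rows = []
    for p, q in product([True, False], repeat=2):
        rows.append((p, q) + tuple(f(p, q) for f in COLUMNS.values()))
    return rows

def show(value):
    """Render a truth value as T or F."""
    return "T" if value else "F"

print(" | ".join(["p", "q", *COLUMNS]))
for row in truth_table():
    print(" | ".join(show(v) for v in row))
```

Adding a column, for example the contraposition ¬q → ¬p, only requires one more entry in `COLUMNS`.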

Arguments and inferences

Main articles: Argument and inference

Logic is commonly defined in terms of arguments or inferences as the study of their correctness.[3][7] An argument is a set of premises together with a conclusion.[62][63] An inference is the process of reasoning from these premises to the conclusion.[7] But these terms are often used interchangeably in logic. Arguments are correct or incorrect depending on whether their premises support their conclusion. Premises and conclusions, on the other hand, are true or false depending on whether they are in accord with reality. In formal logic, a sound argument is an argument that is both correct and has only true premises.[64] Sometimes a distinction is made between simple and complex arguments. A complex argument is made up of a chain of simple arguments. These simple arguments constitute a chain because the conclusions of the earlier arguments are used as premises in the later arguments. For a complex argument to be successful, each link of the chain has to be successful.[7]

Argument terminology used in logic

Arguments and inferences are either correct or incorrect. If they are correct, then their premises support their conclusion. In the incorrect case, this support is missing. The support can take different forms corresponding to the different types of reasoning.[65][40][66] The strongest form of support corresponds to deductive reasoning. But even arguments that are not deductively valid may still constitute good arguments because their premises offer non-deductive support to their conclusions. For such cases, the term ampliative or inductive reasoning is used.[18][40][66] Deductive arguments are associated with formal logic, whereas ampliative arguments are associated with informal logic.[24][36][67]

Deductive

A deductively valid argument is one whose premises guarantee the truth of its conclusion.[8][17] For instance, the argument "(1) all frogs are amphibians; (2) no cats are amphibians; (3) therefore no cats are frogs" is deductively valid. For deductive validity, it does not matter whether the premises or the conclusion are actually true. So the argument "(1) all frogs are mammals; (2) no cats are mammals; (3) therefore no cats are frogs" is also valid because the conclusion follows necessarily from the premises.[68]

Alfred Tarski holds that deductive arguments have three essential features: (1) they are formal, i.e. they depend only on the form of the premises and the conclusion; (2) they are a priori, i.e. no sense experience is needed to determine whether they obtain; (3) they are modal, i.e. they hold by logical necessity for the given propositions, independent of any other circumstances.[8]

Because of the first feature, the focus on formality, deductive inference is usually identified with rules of inference.[69] Rules of inference specify how the premises and the conclusion have to be structured for the inference to be valid. Arguments that do not follow any rule of inference are deductively invalid.[70][71] The modus ponens is a prominent rule of inference. It has the form "p; if p, then q; therefore q".[71] Knowing that it has just rained (p) and that after rain the streets are wet (p → q), one can use modus ponens to deduce that the streets are wet (q).[72]
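For propositional forms such as modus ponens, validity can be checked by confirming that no assignment of truth values makes all premises true and the conclusion false. The sketch below assumes this truth-table notion of validity; `is_valid` is an illustrative helper, and the second argument form is a made-up contrast case.

```python
from itertools import product

def is_valid(premises, conclusion, num_vars):
    """Valid iff no assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=num_vars):
        if all(prem(*values) for prem in premises) and not conclusion(*values):
            return False
    return True

# Modus ponens: p; if p, then q; therefore q.
# p: "it has just rained", q: "the streets are wet"
modus_ponens = is_valid(
    premises=[lambda p, q: p, lambda p, q: (not p) or q],
    conclusion=lambda p, q: q,
    num_vars=2,
)
print(modus_ponens)  # True

# Contrast case (hypothetical): "p; therefore q" follows no rule of inference.
non_sequitur = is_valid(
    premises=[lambda p, q: p],
    conclusion=lambda p, q: q,
    num_vars=2,
)
print(non_sequitur)  # False
```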

The third feature can be expressed by stating that deductively valid inferences are truth-preserving: it is impossible for the premises to be true and the conclusion to be false.[6][40][73] Because of this feature, it is often asserted that deductive inferences are uninformative since the conclusion cannot arrive at new information not already present in the premises.[38][39] But this point is not always accepted since it would mean, for example, that most of mathematics is uninformative. A different characterization distinguishes between surface and depth information. On this view, deductive inferences are uninformative on the depth level but can be highly informative on the surface level, as may be the case for various mathematical proofs.[38][74][75]

Ampliative

Ampliative inferences, on the other hand, are informative even on the depth level. They are more interesting in this sense since the thinker may acquire substantive information from them and thereby learn something genuinely new.[76][40][41] But this feature comes with a certain cost: the premises support the conclusion in the sense that they make its truth more likely but they do not ensure its truth.[6][40][41] This means that the conclusion of an ampliative argument may be false even though all its premises are true. This characteristic is closely related to non-monotonicity and defeasibility: it may be necessary to retract an earlier conclusion upon receiving new information or in the light of new inferences drawn.[3][77][73] Ampliative reasoning plays a central role for many arguments found in everyday discourse and the sciences. Ampliative arguments are not automatically incorrect. Instead, they just follow different standards of correctness. The support they provide for their conclusion usually comes in degrees. This means that strong ampliative arguments make their conclusion very likely while weak ones are less certain. As a consequence, the line between correct and incorrect arguments is blurry in some cases, as when the premises offer weak but non-negligible support. This contrasts with deductive arguments, which are either valid or invalid with nothing in-between.[66][73][78]

The terminology used to categorize ampliative arguments is inconsistent. Some authors, like James Hawthorne, use the term "induction" to cover all forms of non-deductive arguments.[66][78][79] But in a narrower sense, induction is only one type of ampliative argument besides abductive arguments.[73] Some philosophers, like Leo Groarke, also allow conductive arguments as one more type.[24][80] In this narrow sense, induction is often defined as a form of statistical generalization.[81][82] In this case, the premises of an inductive argument are many individual observations that all show a certain pattern. The conclusion then is a general law that this pattern always obtains.[83] In this sense, one may infer that "all elephants are gray" based on one's past observations of the color of elephants.[73] A closely related form of inductive inference has as its conclusion not a general law but one more specific instance, as when it is inferred that an elephant one has not seen yet is also gray.[83] Some theorists, like Igor Douven, stipulate that inductive inferences rest only on statistical considerations in order to distinguish them from abductive inference.[73]

Abductive inference may or may not take statistical observations into consideration. In either case, the premises offer support for the conclusion because the conclusion is the best explanation of why the premises are true.[73][84] In this sense, abduction is also called the inference to the best explanation.[85] For example, given the premise that there is a plate with breadcrumbs in the kitchen in the early morning, one may infer the conclusion that one's house-mate had a midnight snack and was too tired to clean the table. This conclusion is justified because it is the best explanation of the current state of the kitchen.[73] For abduction, it is not sufficient that the conclusion explains the premises. For example, the conclusion that a burglar broke into the house last night, got hungry on the job, and had a midnight snack, would also explain the state of the kitchen. But this conclusion is not justified because it is not the best or most likely explanation.[73][84][85]

Fallacies

Not all arguments live up to the standards of correct reasoning. When they do not, they are usually referred to as fallacies. Their central aspect is not that their conclusion is false but that there is some flaw with the reasoning leading to this conclusion.[86][87] So the argument "it is sunny today; therefore spiders have eight legs" is fallacious even though the conclusion is true. Some theorists, like John Stuart Mill, give a more restrictive definition of fallacies by additionally requiring that they appear to be correct.[33][86] This way, genuine fallacies can be distinguished from mere mistakes of reasoning due to carelessness. This explains why people tend to commit fallacies: because they have an alluring element that seduces people into committing and accepting them.[86] However, this reference to appearances is controversial because it belongs to the field of psychology, not logic, and because appearances may be different for different people.[86][88]

Young America's dilemma: Shall I be wise and great, or rich and powerful? (poster from 1901). This is an example of a false dilemma: an informal fallacy using a disjunctive premise that excludes viable alternatives.

Fallacies are usually divided into formal and informal fallacies.[42][43][44] For formal fallacies, the source of the error is found in the form of the argument. For example, denying the antecedent is one type of formal fallacy, as in "if Othello is a bachelor, then he is male; Othello is not a bachelor; therefore Othello is not male".[89][90] But most fallacies fall into the category of informal fallacies, of which a great variety is discussed in the academic literature. The source of their error is usually found in the content or the context of the argument.[33][43][86] Informal fallacies are sometimes categorized as fallacies of ambiguity, fallacies of presumption, or fallacies of relevance. For fallacies of ambiguity, the ambiguity and vagueness of natural language are responsible for their flaw, as in "feathers are light; what is light cannot be dark; therefore feathers cannot be dark".[34][91][44] Fallacies of presumption have a wrong or unjustified premise but may be valid otherwise.[44][92] In the case of fallacies of relevance, the premises do not support the conclusion because they are not relevant to it.[44][91]
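That denying the antecedent is a flaw of form can be shown by searching for a counterexample: an assignment under which both premises are true and the conclusion is false. The sketch below is one way to do this; `find_counterexample` and the variable names `b` and `m` are illustrative.

```python
from itertools import product

def find_counterexample(premises, conclusion, names):
    """Return an assignment with true premises and a false conclusion, or None."""
    for values in product([True, False], repeat=len(names)):
        env = dict(zip(names, values))
        if all(prem(env) for prem in premises) and not conclusion(env):
            return env
    return None

# b: "Othello is a bachelor", m: "Othello is male"
premises = [
    lambda e: (not e["b"]) or e["m"],  # if b, then m
    lambda e: not e["b"],              # not b
]
conclusion = lambda e: not e["m"]      # therefore not m

print(find_counterexample(premises, conclusion, ["b", "m"]))
# {'b': False, 'm': True}
```

The counterexample corresponds to a married man: he is not a bachelor, yet he is male, so the premises hold while the conclusion fails.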

Definitory and strategic rules

The main focus of most logicians is to investigate the criteria according to which an argument is correct or incorrect. A fallacy is committed if these criteria are violated. In the case of formal logic, they are known as rules of inference.[65] They constitute definitory rules, which determine whether a certain inference is correct or which inferences are allowed. Definitory rules contrast with strategic rules. Strategic rules specify which inferential moves are necessary in order to reach a given conclusion based on a certain set of premises. This distinction applies not just to logic but also to various games. In chess, for example, the definitory rules dictate that bishops may only move diagonally while the strategic rules describe how the allowed moves may be used to win a game, for example, by controlling the center and by defending one's king.[65][93][94] A third type of rules concerns empirical descriptive rules. They belong to the field of psychology and generalize how people actually draw inferences. It has been argued that logicians should give more emphasis to strategic rules since they are highly relevant for effective reasoning.[65]

Formal systems

Main article: Formal system

A formal system of logic consists of a formal language together with a set of axioms and a proof system used to draw inferences from these axioms.[95][96] Some theorists also include a semantics that specifies how the expressions of the formal language relate to real objects.[97][98] The term "a logic" is used as a countable noun to refer to a particular formal system of logic.[4][99][21] Starting in the late 19th century, many new formal systems have been proposed.[4][22][21]

A formal language consists of an alphabet and syntactic rules. The alphabet is the set of basic symbols used in expressions. The syntactic rules determine how these symbols may be arranged to result in well-formed formulas.[100][101] For instance, the syntactic rules of propositional logic determine that "P ∧ Q" is a well-formed formula but "∧ Q" is not since the logical conjunction ∧ requires terms on both sides.[102]
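
The role of syntactic rules can be illustrated with a small checker. The following is a minimal sketch in Python, assuming a hypothetical mini-grammar that is not taken from the cited sources: atoms are single uppercase letters, ¬ is a prefix operator, and a binary connective joins exactly two units, so longer formulas need parentheses. All function names are illustrative.

```python
# Hypothetical mini-grammar (an assumption for illustration only):
#   formula ::= unit | unit BINARY unit
#   unit    ::= ATOM | "¬" unit | "(" formula ")"
BINARY = {"∧", "∨", "→"}


def tokenize(text):
    """Split the input into single-character tokens, ignoring spaces."""
    return [ch for ch in text if not ch.isspace()]


def parse_unit(tokens, pos):
    """Parse one unit at pos; return the position after it, or None."""
    if pos >= len(tokens):
        return None
    tok = tokens[pos]
    if tok.isalpha() and tok.isupper():      # atomic proposition
        return pos + 1
    if tok == "¬":                           # negation applies to one unit
        return parse_unit(tokens, pos + 1)
    if tok == "(":                           # parenthesised formula
        end = parse_formula(tokens, pos + 1)
        if end is not None and end < len(tokens) and tokens[end] == ")":
            return end + 1
    return None


def parse_formula(tokens, pos):
    """Parse a unit, optionally followed by a connective and a second unit."""
    left = parse_unit(tokens, pos)
    if left is None:
        return None
    if left < len(tokens) and tokens[left] in BINARY:
        return parse_unit(tokens, left + 1)
    return left


def is_well_formed(text):
    """True if the whole input is exactly one formula of the mini-grammar."""
    tokens = tokenize(text)
    return parse_formula(tokens, 0) == len(tokens)
```

Under these assumptions, `is_well_formed("P ∧ Q")` accepts the formula while `is_well_formed("∧ Q")` rejects it, because the parser finds no term to the left of the conjunction.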

A proof system is a collection of rules that may be used to formulate formal proofs. In this regard, it may be understood as an inference machine that arrives at conclusions from a set of axioms. Rules in a proof system are defined in terms of the syntactic form of formulas independent of their specific content. For instance, the classical rule of conjunction introduction states that P ∧ Q follows from the premises P and Q. Such rules can be applied sequentially, giving a mechanical procedure for generating conclusions from premises. There are several different types of proof systems including natural deduction and sequent calculi.[103][104]
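
The mechanical character of such rules can be sketched as a small proof checker. This is an illustrative Python sketch, not a standard library or a full natural deduction system: formulas are assumed to be strings (atoms) or tuples such as `("and", "P", "Q")`, and only three rules are supported. The rule names and the step format are hypothetical.

```python
def check_proof(premises, steps):
    """Check a proof line by line using purely syntactic rules.

    premises: list of formulas (lines 0, 1, ...).
    steps: list of (formula, rule, refs), where refs are indices of
           earlier lines that the rule is applied to.
    """
    lines = list(premises)
    for formula, rule, refs in steps:
        # Every reference must point to a line that already exists.
        if not all(0 <= i < len(lines) for i in refs):
            return False
        cited = [lines[i] for i in refs]
        if rule == "and_intro":
            # From A and B, infer ("and", A, B).
            ok = len(cited) == 2 and formula == ("and", cited[0], cited[1])
        elif rule == "and_elim":
            # From ("and", A, B), infer A or B.
            ok = (len(cited) == 1
                  and isinstance(cited[0], tuple)
                  and cited[0][0] == "and"
                  and formula in cited[0][1:])
        elif rule == "modus_ponens":
            # From ("imp", A, B) and A, infer B.
            ok = len(cited) == 2 and cited[0] == ("imp", cited[1], formula)
        else:
            ok = False
        if not ok:
            return False
        lines.append(formula)
    return True
```

For example, `check_proof(["P", "Q"], [(("and", "P", "Q"), "and_intro", [0, 1])])` accepts the one-step proof by conjunction introduction. The checker never consults the meaning of P or Q, only the shape of the formulas.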

A semantics is a system for mapping expressions of a formal language to their denotations. In many systems of logic, denotations are truth values. For instance, the semantics for classical propositional logic assigns the formula P ∧ Q the denotation "true" whenever P and Q are true. From the semantic point of view, a premise entails a conclusion if the conclusion is true whenever the premise is true.[105][106][107]
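
For classical propositional logic, this notion of entailment can be checked by brute force over all truth-value assignments. The sketch below assumes a hypothetical tuple encoding of formulas (`"P"` for atoms, `("and", A, B)`, `("or", A, B)`, `("imp", A, B)`, `("not", A)`); the encoding and function names are illustrative choices, not part of the source.

```python
from itertools import product


def atoms(formula):
    """Collect the atomic propositions occurring in a formula."""
    if isinstance(formula, str):
        return {formula}
    return set().union(*(atoms(sub) for sub in formula[1:]))


def evaluate(formula, valuation):
    """Compute the truth value of a formula under a valuation (dict)."""
    if isinstance(formula, str):
        return valuation[formula]
    op = formula[0]
    if op == "not":
        return not evaluate(formula[1], valuation)
    left = evaluate(formula[1], valuation)
    right = evaluate(formula[2], valuation)
    if op == "and":
        return left and right
    if op == "or":
        return left or right
    if op == "imp":
        return (not left) or right
    raise ValueError(f"unknown connective: {op}")


def entails(premises, conclusion):
    """True if every valuation satisfying all premises satisfies the conclusion."""
    names = sorted(set().union(atoms(conclusion), *(atoms(p) for p in premises)))
    for values in product([False, True], repeat=len(names)):
        valuation = dict(zip(names, values))
        if all(evaluate(p, valuation) for p in premises) and not evaluate(conclusion, valuation):
            return False  # found a counterexample valuation
    return True
```

With this encoding, `entails([("and", "P", "Q")], "P")` holds, while `entails(["P"], ("and", "P", "Q"))` does not, since P can be true while Q is false.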

A system of logic is sound when its proof system cannot derive a conclusion from a set of premises unless it is semantically entailed by them. In other words, its proof system cannot lead to false conclusions, as defined by the semantics. A system is complete when its proof system can derive every conclusion that is semantically entailed by its premises. In other words, its proof system can lead to any true conclusion, as defined by the semantics. Thus, soundness and completeness together describe a system whose notions of validity and entailment line up perfectly.[108][109][110]

Systems of logic

Systems of logic are theoretical frameworks for assessing the correctness of reasoning and arguments. For over two thousand years, Aristotelian logic was treated as the canon of logic in the Western world,[21][111][112] but modern developments in this field have led to a vast proliferation of logical systems.[113] One prominent categorization divides modern formal logical systems into classical logic, extended logics, and deviant logics.[4][113][114] Classical logic is to be distinguished from traditional or Aristotelian logic. It encompasses propositional logic and first-order logic. It is "classical" in the sense that it is based on various fundamental logical intuitions shared by most logicians.[3][115][116] These intuitions include the law of excluded middle, the double negation elimination, the principle of explosion, and the bivalence of truth.[117] It was originally developed to analyze mathematical arguments and was only later applied to other fields as well. Because of this focus on mathematics, it does not include logical vocabulary relevant to many other topics of philosophical importance, like the distinction between necessity and possibility, the problem of ethical obligation and permission, or the relations between past, present, and future.[118] Such issues are addressed by extended logics. They build on the fundamental intuitions of classical logic and expand it by introducing new logical vocabulary. This way, the exact logical approach is applied to fields like ethics or epistemology that lie beyond the scope of mathematics.[21][119][120]

Deviant logics, on the other hand, reject some of the fundamental intuitions of classical logic. Because of this, they are usually seen not as its supplements but as its rivals. Deviant logical systems differ from each other either because they reject different classical intuitions or because they propose different alternatives to the same issue.[113][114]

Informal logic is usually carried out in a less systematic way. It often focuses on more specific issues, like investigating a particular type of fallacy or studying a certain aspect of argumentation.[24] Nonetheless, some systems of informal logic have also been presented that try to provide a systematic characterization of the correctness of arguments.[86][121][122]

Aristotelian

Aristotelian logic encompasses a great variety of topics, including metaphysical theses about ontological categories and problems of scientific explanation. But in a more narrow sense, it is identical to term logic or syllogistics. A syllogism is a certain form of argument involving three propositions: two premises and a conclusion. Each proposition has three essential parts: a subject, a predicate, and a copula connecting the subject to the predicate.[111][112][123] For example, the proposition "Socrates is wise" is made up of the subject "Socrates", the predicate "wise", and the copula "is".[112] The subject and the predicate are the terms of the proposition. In this sense, Aristotelian logic does not contain complex propositions made up of various simple propositions. It differs in this aspect from propositional logic, in which any two propositions can be linked using a logical connective like "and" to form a new complex proposition.[111][124]

The square of opposition is often used to visualize the relations between the four basic categorical propositions in Aristotelian logic. It shows, for example, that the propositions "All S are P" and "Some S are not P" are contradictory, meaning that one of them has to be true while the other is false.

Aristotelian logic differs from predicate logic in that the subject is either universal, particular, indefinite, or singular. For example, the term "all humans" is a universal subject in the proposition "all humans are mortal". A similar proposition could be formed by replacing it with the particular term "some humans", the indefinite term "a human", or the singular term "Socrates".[111][123][125] In predicate logic, on the other hand, universal and particular propositions would be expressed by using a quantifier and two predicates.[111][126] Another key difference is that Aristotelian logic only includes predicates for simple properties of entities, but lacks predicates corresponding to relations between entities.[127] The predicate can be linked to the subject in two ways: either by affirming it or by denying it.[111][112] For example, the proposition "Socrates is not a cat" involves the denial of the predicate "cat" to the subject "Socrates". Using different combinations of subjects and predicates, a great variety of propositions and syllogisms can be formed. Syllogisms are characterized by the fact that the premises are linked to each other and to the conclusion by sharing one predicate in each case.[111][128][129] Thus, these three propositions contain three predicates, referred to as major term, minor term, and middle term.[112][128][129] The central aspect of Aristotelian logic involves classifying all possible syllogisms into valid and invalid arguments according to how the propositions are formed.[111][112][128] For example, the syllogism "all men are mortal; Socrates is a man; therefore Socrates is mortal" is valid. The syllogism "all cats are mortal; Socrates is mortal; therefore Socrates is a cat", on the other hand, is invalid.[130]
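
The difference between the two example syllogisms can be made concrete by searching for a counterexample. The following Python sketch is a modern set-based reading and not Aristotle's own method: the two general terms are assumed to denote subsets of a tiny domain, "Socrates" denotes one of its elements, and the function names are hypothetical. Finding a countermodel shows that a form is invalid; finding none in such a small domain does not by itself prove validity.

```python
from itertools import product


def subsets(domain):
    """Yield every subset of the domain as a set."""
    for bits in product([False, True], repeat=len(domain)):
        yield {x for x, keep in zip(domain, bits) if keep}


def find_countermodel(premises, conclusion, domain=(0, 1)):
    """Look for an interpretation with true premises and a false conclusion.

    Each premise and the conclusion is a function of
    (first_term, second_term, socrates) returning a truth value.
    """
    all_sets = list(subsets(domain))
    for first, second in product(all_sets, repeat=2):
        for socrates in domain:
            if (all(p(first, second, socrates) for p in premises)
                    and not conclusion(first, second, socrates)):
                return first, second, socrates
    return None


# "All men are mortal; Socrates is a man; therefore Socrates is mortal."
valid_form = find_countermodel(
    premises=[lambda men, mortal, s: men <= mortal,
              lambda men, mortal, s: s in men],
    conclusion=lambda men, mortal, s: s in mortal,
)

# "All cats are mortal; Socrates is mortal; therefore Socrates is a cat."
invalid_form = find_countermodel(
    premises=[lambda cats, mortal, s: cats <= mortal,
              lambda cats, mortal, s: s in mortal],
    conclusion=lambda cats, mortal, s: s in cats,
)
```

Here `valid_form` is `None`, while `invalid_form` holds an interpretation in which Socrates is mortal but not a cat.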

Classical

Propositional logic

Main article: Propositional calculus

Propositional logic comprises formal systems in which formulae are built from atomic propositions using logical connectives. For instance, propositional logic represents the conjunction of two atomic propositions P and Q as the complex formula P ∧ Q. Unlike predicate logic where terms and predicates are the smallest units, propositional logic takes full propositions with truth values as its most basic component.[131] Thus, propositional logic can only represent logical relationships that arise from the way complex propositions are built from simpler ones; it cannot represent inferences that result from the inner structure of a proposition.[132]

First-order logic

Gottlob Frege's Begriffsschrift introduced the notion of quantifier in a graphical notation, which here represents the judgement that ∀x. F(x) is true.

Main article: First-order logic

First-order logic includes the same propositional connectives as propositional logic but differs from it because it articulates the internal structure of propositions. This happens through devices such as singular terms, which refer to particular objects, predicates, which refer to properties and relations, and quantifiers, which treat notions like "some" and "all".[133][46][56] For example, to express the proposition "this raven is black", one may use the predicate B for the property "black" and the singular term r referring to the raven to form the expression B(r). To express that some objects are black, the existential quantifier ∃ is combined with the variable x to form the proposition ∃x B(x). First-order logic contains various rules of inference that determine how expressions articulated this way can form valid arguments, for example, that one may infer ∃x B(x) from B(r).[134][135]
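
The raven example can be mirrored in a small finite model. This is only a sketch: the domain, the object names, and the helper functions `exists` and `forall` are invented for illustration, and evaluating formulas in one model illustrates the inference from B(r) to ∃x B(x) rather than proving the rule in general.

```python
# A hypothetical finite model: a domain of objects and the extension of "black".
DOMAIN = {"raven1", "snowball", "coal"}
BLACK = {"raven1", "coal"}
r = "raven1"  # the singular term r denotes this raven


def B(x):
    """The predicate B: x is black."""
    return x in BLACK


def exists(predicate, domain):
    """Existential quantifier: some element of the domain satisfies the predicate."""
    return any(predicate(x) for x in domain)


def forall(predicate, domain):
    """Universal quantifier: every element of the domain satisfies the predicate."""
    return all(predicate(x) for x in domain)
```

In this model `B(r)` is true, so `exists(B, DOMAIN)` is true as well, whereas `forall(B, DOMAIN)` is false because one object is not black.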

Gottlob Frege developed the first fully axiomatic system of first-order logic.[136]

The development of first-order logic is usually attributed to Gottlob Frege.[137][138] The analytical generality of first-order logic allowed the formalization of mathematics, drove the investigation of set theory, and allowed the development of Alfred Tarski's approach to model theory. It provides the foundation of modern mathematical logic.[139][140]

Extended

Modal logic

Main article: Modal logic

Modal logic is an extension of classical logic. In its original form, sometimes called "alethic modal logic", it introduces two new symbols: ◊ expresses that something is possible while ◻ expresses that something is necessary.[141][142] For example, if the formula B(s) stands for the sentence "Socrates is a banker" then the formula ◊B(s) articulates the sentence "It is possible that Socrates is a banker".[143] In order to include these symbols in the logical formalism, modal logic introduces new rules of inference that govern what role they play in inferences. One rule of inference states that, if something is necessary, then it is also possible. This means that ◊A follows from ◻A. Another principle states that if a proposition is necessary then its negation is impossible and vice versa. This means that ◻A is equivalent to ¬◊¬A.[144][145][146]
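
These two principles can be explored with a small evaluator. The sketch below assumes possible-worlds (Kripke-style) semantics, which the text above does not introduce: ◻A is read as "A holds in every accessible world" and ◊A as "A holds in some accessible world". The worlds, the accessibility relation, and the tuple encoding `("box", A)`, `("dia", A)`, `("not", A)` are hypothetical.

```python
def holds(formula, world, access, valuation):
    """Evaluate a modal formula at a world of a possible-worlds model."""
    if isinstance(formula, str):
        return formula in valuation[world]
    op, sub = formula
    if op == "not":
        return not holds(sub, world, access, valuation)
    if op == "box":   # necessary: true in every accessible world
        return all(holds(sub, w, access, valuation) for w in access[world])
    if op == "dia":   # possible: true in some accessible world
        return any(holds(sub, w, access, valuation) for w in access[world])
    raise ValueError(f"unknown operator: {op}")


# A model in which every world can access at least one world.
ACCESS = {"w1": {"w1", "w2"}, "w2": {"w2"}}
VALUATION = {"w1": {"A"}, "w2": set()}

BOX_A = ("box", "A")
DIA_A = ("dia", "A")
NOT_DIA_NOT_A = ("not", ("dia", ("not", "A")))

# A model with a dead-end world that accesses nothing.
DEAD_END_ACCESS = {"w3": set()}
DEAD_END_VALUATION = {"w3": set()}
```

In both models ◻A and ¬◊¬A receive the same value at every world. The step from ◻A to ◊A holds in the first model but fails at the dead-end world `w3`, where ◻A is vacuously true and ◊A is false, which suggests that this rule relies on every world having access to at least one world.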

Other forms of modal logic introduce similar symbols but associate different meanings with them to apply modal logic to other fields. For example, deontic logic concerns the field of ethics and introduces symbols to express the ideas of obligation and permission, i.e. to describe whether an agent has to perform a certain action or is allowed to perform it.[147] The modal operators in temporal modal logic articulate temporal relations. They can be used to express, for example, that something happened at one time or that something is happening all the time.[148] In epistemology, epistemic modal logic is used to represent the ideas of knowing something in contrast to merely believing it to be the case.[149]

Higher-order logic

Main article: Higher-order logic

Higher-order logics extend classical logic not by using modal operators but by introducing new forms of quantification.[7][150][151] Quantifiers correspond to terms like "all" or "some". In classical first-order logic, quantifiers are only applied to individuals. The formula "∃x(Apple(x) ∧ Sweet(x))" (some apples are sweet) is an example of the existential quantifier "∃" applied to the individual variable "x". In higher-order logics, quantification is also allowed over predicates. This increases their expressive power. For example, to express the idea that Mary and John share some qualities, one could use the formula "∃Q(Q(mary) ∧ Q(john))". In this case, the existential quantifier is applied to the predicate variable "Q".[7][150][152] The added expressive power is especially useful for mathematics since it allows for more succinct formulations of mathematical theories.[7] But it has various drawbacks in regard to its meta-logical properties and ontological implications, which is why first-order logic is still much more widely used.[7][151]

Deviant

Intuitionistic logic is a restricted version of classical logic.[153][119] It uses the same symbols but excludes certain rules of inference. For example, according to the law of double negation elimination, if a sentence is not not true, then it is true. This means that A follows from ¬¬A. This is a valid rule of inference in classical logic but it is invalid in intuitionistic logic. Another classical principle not part of intuitionistic logic is the law of excluded middle. It states that for every sentence, either it or its negation is true. This means that every proposition of the form A ∨ ¬A is true.[154][119] These deviations from classical logic are based on the idea that truth is established by verification using a proof. Intuitionistic logic is especially prominent in the field of constructive mathematics, which emphasizes the need to find or construct a specific example in order to prove its existence.[119][155][156]
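
One way to see that these two principles can fail is to evaluate them in a small algebra of truth values. The sketch below assumes Heyting-algebra semantics on the three-element chain 0 < 1 < 2, which is not discussed in the text above and is chosen here only for illustration. Intuitionistically provable formulas always take the top value in such an algebra, so a formula that falls short of the top value for some input is not intuitionistically provable.

```python
# Three-element chain used as an illustrative Heyting algebra (an assumption).
TOP = 2
VALUES = (0, 1, 2)


def neg(a):
    """Negation: top if a is the bottom value, otherwise bottom."""
    return TOP if a == 0 else 0


def disj(a, b):
    """Disjunction is the maximum of the two values."""
    return max(a, b)


def imp(a, b):
    """Implication: top if a <= b, otherwise the value of b."""
    return TOP if a <= b else b


def is_valid(formula):
    """A one-variable formula is valid here if it is top for every input."""
    return all(formula(a) == TOP for a in VALUES)


def excluded_middle(a):
    return disj(a, neg(a))              # A ∨ ¬A


def double_negation_elimination(a):
    return imp(neg(neg(a)), a)          # ¬¬A → A


def double_negation_introduction(a):
    return imp(a, neg(neg(a)))          # A → ¬¬A
```

In this algebra `excluded_middle` and `double_negation_elimination` both fall short of the top value at the middle element, while `double_negation_introduction`, which intuitionistic logic accepts, is valid.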

Multi-valued logics depart from classicality by rejecting the principle of bivalence, which requires all propositions to be either true or false. For instance, Jan Łukasiewicz and Stephen Cole Kleene both proposed ternary logics which have a third truth value representing that a statement's truth value is indeterminate.[157][158][159] These logics have been applied, among other things, to presupposition in linguistics. Fuzzy logics are multi-valued logics that have an infinite number of "degrees of truth", represented by a real number between 0 and 1.[160]
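
A ternary logic and a fuzzy logic can each be sketched in a few lines. The code below assumes Kleene's strong three-valued connectives, with Python's `None` standing in for the indeterminate value, and the common minimum/maximum operators for fuzzy logic. Both are illustrative choices among several systems, and the function names are hypothetical.

```python
def kleene_not(a):
    """Negation: the indeterminate value (None) stays indeterminate."""
    return None if a is None else not a


def kleene_and(a, b):
    """Conjunction: false if either side is false, else indeterminate if unsure."""
    if a is False or b is False:
        return False
    if a is None or b is None:
        return None
    return True


def kleene_or(a, b):
    """Disjunction defined from negation and conjunction (De Morgan)."""
    return kleene_not(kleene_and(kleene_not(a), kleene_not(b)))


def fuzzy_not(x):
    """Fuzzy negation on degrees of truth between 0 and 1."""
    return 1 - x


def fuzzy_and(x, y):
    """Fuzzy conjunction as the minimum degree."""
    return min(x, y)


def fuzzy_or(x, y):
    """Fuzzy disjunction as the maximum degree."""
    return max(x, y)
```

With these definitions, `kleene_or(None, kleene_not(None))` is indeterminate and `fuzzy_or(0.25, fuzzy_not(0.25))` is 0.75, so neither system treats every instance of "A or not A" as fully true.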

Paraconsistent logics are logical systems that can deal with contradictions. They are formulated to avoid the principle of explosion: for them, it is not the case that anything follows from a contradiction.[119][161][162] They are often motivated by dialetheism, the view that contradictions are real or that reality itself is contradictory. Graham Priest is an influential contemporary proponent of this position and similar views have been ascribed to Georg Wilhelm Friedrich Hegel.[161][162][163]

Informal

The pragmatic or dialogical approach to informal logic sees arguments as speech acts and not merely as a set of premises together with a conclusion.[86][121][122] As speech acts, they occur in a certain context, like a dialogue, which affects the standards of right and wrong arguments.[33][122] A prominent version by Douglas N. Walton understands a dialogue as a game between two players.[86] The initial position of each player is characterized by the propositions to which they are committed and the conclusion they intend to prove. Dialogues are games of persuasion: each player has the goal of convincing the opponent of their own conclusion.[33] This is achieved by making arguments: arguments are the moves of the game.[33][122] They affect which propositions the players are committed to. A winning move is a successful argument that takes the opponent's commitments as premises and shows how one's own conclusion follows from them. This is usually not possible straight away. For this reason, it is normally necessary to formulate a sequence of arguments as intermediary steps, each of which brings the opponent a little closer to one's intended conclusion. Besides these positive arguments leading one closer to victory, there are also negative arguments preventing the opponent's victory by denying their conclusion.[33] Whether an argument is correct depends on whether it promotes the progress of the dialogue. Fallacies, on the other hand, are violations of the standards of proper argumentative rules.[86][88] These standards also depend on the type of dialogue. For example, the standards governing scientific discourse differ from the standards in business negotiations.[122]

The epistemic approach to informal logic, on the other hand, focuses on the epistemic role of arguments.[86][121] It is based on the idea that arguments aim to increase our knowledge. They achieve this by linking justified beliefs to beliefs that are not yet justified.[164] Correct arguments succeed at expanding knowledge while fallacies are epistemic failures: they do not justify the belief in their conclusion.[86][121] In this sense, logical normativity consists in epistemic success or rationality.[164] For example, the fallacy of begging the question is a fallacy because it fails to provide independent justification for its conclusion, even though it is deductively valid.[91][164] The Bayesian approach is one example of an epistemic approach.[86] Central to Bayesianism is not just whether the agent believes something but the degree to which they believe it, the so-called credence. Degrees of belief are understood as subjective probabilities in the believed proposition, i.e. as how certain the agent is that the proposition is true.[165][166][167] On this view, reasoning can be interpreted as a process of changing one's credences, often in reaction to new incoming information.[86] Correct reasoning, and the arguments it is based on, follows the laws of probability, for example, the principle of conditionalization. Bad or irrational reasoning, on the other hand, violates these laws.[121][166][168]
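
The principle of conditionalization mentioned above can be written out as a short calculation. This is a minimal sketch of Bayes' theorem for a single hypothesis and a single piece of evidence; the function and parameter names are hypothetical and the numbers in the usage note are made up.

```python
def conditionalize(prior, likelihood_if_true, likelihood_if_false):
    """Return the updated credence in a hypothesis after observing evidence.

    prior: credence in the hypothesis before the evidence.
    likelihood_if_true: probability of the evidence if the hypothesis is true.
    likelihood_if_false: probability of the evidence if the hypothesis is false.
    """
    # Total probability of the evidence.
    evidence = prior * likelihood_if_true + (1 - prior) * likelihood_if_false
    if evidence == 0:
        raise ValueError("cannot conditionalize on evidence with probability 0")
    # Bayes' theorem: P(H | E) = P(E | H) * P(H) / P(E).
    return prior * likelihood_if_true / evidence
```

For instance, an agent with a prior credence of 0.5 who observes evidence that is four times as likely if the hypothesis is true (0.8 versus 0.2) ends up with a credence of about 0.8.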

Areas of research

Logic is studied in various fields. In many cases, this is done by applying its formal method to specific topics outside its scope, such as ethics or computer science.[3][4] In other cases, logic itself is made the subject of research in another discipline. This can happen in diverse ways, for example by investigating the philosophical presuppositions of fundamental logical concepts, by interpreting and analyzing logic through mathematical structures, or by studying and comparing abstract properties of formal logical systems.[3][169][170]

Philosophy of logic and philosophical logic

Main articles: Philosophy of logic and Philosophical logic

Philosophy of logic is the philosophical discipline studying the scope and nature of logic.[3][7] It investigates many presuppositions implicit in logic, like how to define its fundamental concepts or the metaphysical assumptions associated with them.[21] It is also concerned with how to classify the different logical systems and considers the ontological commitments they incur.[3] Philosophical logic is one of the areas within the philosophy of logic. It studies the application of logical methods to philosophical problems in fields like metaphysics, ethics, and epistemology.[21][118] This application usually happens in the form of extended or deviant logical systems.[120][22]

Metalogic

Main article: Metalogic

Metalogic is the field of inquiry studying the properties of formal logical systems. For example, when a new formal system is developed, metalogicians may investigate it to determine which formulas can be proven in it, whether an algorithm could be developed to find a proof for each formula, whether every provable formula in it is a tautology, and how it compares to other logical systems. A key issue in metalogic concerns the relation between syntax and semantics. The syntactic rules of a formal system determine how to deduce conclusions from premises, i.e. how to formulate proofs. The semantics of a formal system governs which sentences are true and which ones are false. This determines the validity of arguments since, for valid arguments, it is impossible for the premises to be true and the conclusion to be false. The relation between syntax and semantics concerns issues like whether every valid argument is provable and whether every provable argument is valid. Other metalogical properties investigated include completeness, soundness, consistency, decidability, and expressive power. Metalogicians usually rely heavily on abstract mathematical reasoning when investigating and formulating metalogical proofs in order to arrive at precise and general conclusions on these topics.[171][172][173]

Mathematical logic

Main article: Mathematical logic

Bertrand Russell made various significant contributions to mathematical logic.[174]

Mathematical logic is the study of logic within mathematics. Major subareas include model theory, proof theory, set theory, and computability theory.[175][176] Research in mathematical logic commonly addresses the mathematical properties of formal systems of logic. However, it can also include attempts to use logic to analyze mathematical reasoning or to establish logic-based foundations of mathematics.[177] The latter was a major concern in early 20th-century mathematical logic, which pursued the program of logicism pioneered by philosopher-logicians such as Gottlob Frege, Alfred North Whitehead, and Bertrand Russell. Mathematical theories were supposed to be logical tautologies, and the program was to show this by means of a reduction of mathematics to logic. The various attempts to carry this out met with failure, from the crippling of Frege's project in his Grundgesetze by Russell's paradox, to the defeat of Hilbert's program by Gödel's incompleteness theorems.[178][179][180]

Set theory originated in the study of the infinite by Georg Cantor, and it has been the source of many of the most challenging and important issues in mathematical logic. They include Cantor's theorem, the status of the Axiom of Choice, the question of the independence of the continuum hypothesis, and the modern debate on large cardinal axioms.[181][182]

Computability theory is the branch of mathematical logic that investigates effective procedures to solve calculation problems. An example is the problem of finding a mechanical procedure that can decide for any positive integer whether it is a prime number. One of its main goals is to understand whether it is possible to solve a given problem using an algorithm. Computability theory uses various theoretical tools and models, such as Turing machines, to explore this issue.[183][184]
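
The primality problem mentioned above is a standard example of a decidable question. The sketch below uses trial division, one simple mechanical procedure among many; it is meant to illustrate that such a procedure exists, not to be efficient.

```python
def is_prime(n):
    """Decide whether the integer n is a prime number by trial division."""
    if n < 2:
        return False
    divisor = 2
    # A composite number has a divisor no larger than its square root.
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 1
    return True
```

The loop always terminates, so the procedure gives a yes-or-no answer for every positive integer, which is what makes the problem decidable.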

Computational logic

Main articles: Computational logic and Logic in computer science

Conjunction (AND) is one of the basic operations of boolean logic. It can be electronically implemented in several ways, for example, by using two transistors.

Computational logic is the branch of logic and computer science that studies how to implement mathematical reasoning and logical formalisms using computers. This includes, for example, automatic theorem provers, which employ rules of inference to construct a proof step by step from a set of premises to the intended conclusion without human intervention.[185][186][187] Logic programming languages are designed specifically to express facts using logical formulas and to draw inferences from these facts. For example, Prolog is a logic programming language based on predicate logic.[188][189] Computer scientists also apply concepts from logic to problems in computing. The works of Claude Shannon were influential in this regard. He showed how Boolean logic can be used to understand and implement computer circuits.[190][191] This can be achieved using electronic logic gates, i.e. electronic circuits with one or more inputs and usually one output. The truth values of propositions are represented by different voltage levels. This way, logic functions can be simulated by applying the corresponding voltages to the inputs of the circuit and determining the value of the function by measuring the voltage of the output.[192]
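
The idea of representing truth values by voltage levels can be simulated in software. The following sketch is purely illustrative: the 5-volt and 0-volt levels and the 2.5-volt threshold are assumed values, not taken from the source or from any particular hardware standard, and the half adder is included only to show how gates combine into a circuit.

```python
# Assumed voltage levels for this simulation (hypothetical values).
HIGH = 5.0
LOW = 0.0
THRESHOLD = 2.5


def to_bool(voltage):
    """Read a voltage as a truth value."""
    return voltage > THRESHOLD


def to_voltage(value):
    """Drive an output with the voltage for a truth value."""
    return HIGH if value else LOW


def and_gate(a, b):
    """Output is high only if both inputs are high."""
    return to_voltage(to_bool(a) and to_bool(b))


def xor_gate(a, b):
    """Output is high if exactly one input is high."""
    return to_voltage(to_bool(a) != to_bool(b))


def half_adder(a, b):
    """Add two one-bit inputs; return (sum, carry) as voltages."""
    return xor_gate(a, b), and_gate(a, b)
```

Applying `half_adder(HIGH, HIGH)` gives a low sum and a high carry, which corresponds to the binary result 1 + 1 = 10.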

Formal semantics of natural language

Main article: Formal semantics (natural language)

Formal semantics is a subfield of logic, linguistics, and the philosophy of language. The discipline of semantics studies the meaning of language. Formal semantics uses formal tools from the fields of symbolic logic and mathematics to give precise theories of the meaning of natural language expressions. It understands meaning usually in relation to truth conditions, i.e. it investigates in which situations a sentence would be true or false. One of its central methodological assumptions is the principle of compositionality, which states that the meaning of a complex expression is determined by the meanings of its parts and how they are combined. For example, the meaning of the verb phrase "walk and sing" depends on the meanings of the individual expressions "walk" and "sing". Many theories in formal semantics rely on model theory. This means that they employ set theory to construct a model and then interpret the meanings of expressions in relation to the elements in this model. For example, the term "walk" may be interpreted as the set of all individuals in the model that share the property of walking. Early influential theorists in this field were Richard Montague and Barbara Partee, who focused their analysis on the English language.[193]
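
The set-based treatment of "walk" and the compositional treatment of "walk and sing" can be sketched with a toy model. The individuals and the extensions below are invented, and interpreting "and" between verb phrases as set intersection is a common simplification assumed here for illustration.

```python
# A toy model: each verb denotes the set of individuals it is true of.
MODEL = {
    "walk": {"ann", "bob"},
    "sing": {"bob", "cy"},
}


def denote(word):
    """Look up the extension of a basic expression in the model."""
    return MODEL[word]


def denote_and(left, right):
    """Meaning of 'left and right', built from the meanings of its parts."""
    return denote(left) & denote(right)


def is_true_of(individual, extension):
    """A predicate is true of an individual if the individual is in its extension."""
    return individual in extension
```

In this model the phrase "walk and sing" denotes the set containing only `"bob"`, which is computed from the denotations of "walk" and "sing" alone.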

Epistemology of logic

The epistemology of logic investigates how one knows that an argument is valid or that a proposition is logically true.[194][195] This includes questions like how to justify that modus ponens is a valid rule of inference or that contradictions are false.[194] The traditionally dominant view is that this form of logical understanding belongs to knowledge a priori.[195] In this regard, it is often argued that the mind has a special faculty to examine relations between pure ideas and that this faculty is also responsible for apprehending logical truths.[196] A similar approach understands the rules of logic in terms of linguistic conventions. On this view, the laws of logic are trivial since they are true by definition: they just express the meanings of the logical vocabulary.[194][196][197]

Some theorists, like Hilary Putnam and Penelope Maddy, have objected to the view that logic is knowable a priori and hold instead that logical truths depend on the empirical world. This is usually combined with the claim that the laws of logic express universal regularities found in the structural features of the world and that they can be explored by studying general patterns of the fundamental sciences. For example, it has been argued that certain insights of quantum mechanics refute the principle of distributivity in classical logic, which states that the formula A ∧ (B ∨ C) is equivalent to (A ∧ B) ∨ (A ∧ C). This claim can be used as an empirical argument for the thesis that quantum logic is the correct logical system and should replace classical logic.[198][199][200]
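
Within classical two-valued semantics, the principle of distributivity can be confirmed by checking all eight combinations of truth values. The sketch below does only that; it says nothing about quantum logic, where the connectives are interpreted differently. The function name is hypothetical.

```python
from itertools import product


def distributivity_holds():
    """Check that A ∧ (B ∨ C) and (A ∧ B) ∨ (A ∧ C) agree on every valuation."""
    return all(
        (a and (b or c)) == ((a and b) or (a and c))
        for a, b, c in product([False, True], repeat=3)
    )
```

The function returns `True`, reflecting that the two formulas are classically equivalent.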

History

Main article: History of logic

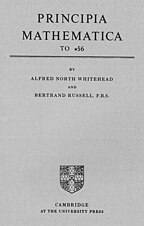

Top row: Aristotle, who established the canon of western philosophy;[112] and Avicenna, who replaced Aristotelian logic in Islamic discourse.[201] Bottom row: William of Ockham, a major figure of medieval scholarly thought;[202] and the Principia Mathematica, which had a large impact on modern logic.

Logic was developed independently in several cultures during antiquity. One major early contributor was Aristotle, who developed term logic in his Organon and Prior Analytics.[203][204][205] Aristotle's system of logic was responsible for the introduction of hypothetical syllogism,[206] temporal modal logic,[207][208] and inductive logic,[209] as well as influential vocabulary such as terms, predicables, syllogisms and propositions. Aristotelian logic was highly regarded in classical and medieval times, both in Europe and the Middle East. It remained in wide use in the West until the early 19th century.[210][112] It has now been superseded by later work, though many of its key insights are still present in modern systems of logic.[211][212]

Ibn Sina (Avicenna) was the founder of Avicennian logic, which replaced Aristotelian logic as the dominant system of logic in the Islamic world.[213][201] It also had a significant influence on Western medieval writers such as Albertus Magnus and William of Ockham.[214][215] Ibn Sina wrote on the hypothetical syllogism[216] and on the propositional calculus.[217] He developed an original "temporally modalized" syllogistic theory, involving temporal logic and modal logic.[218] He also made use of inductive logic, such as his methods of agreement, difference, and concomitant variation, which are critical to the scientific method.[216] Fakhr al-Din al-Razi was another influential Muslim logician. He criticised Aristotelian syllogistics and formulated an early system of inductive logic, foreshadowing the system of inductive logic developed by John Stuart Mill.[219]

During the Middle Ages, many translations and interpretations of Aristotelian logic were made. Of particular influence were the works of Boethius. Besides translating Aristotle's work into Latin, he also produced various textbooks on logic.[220][221] Later, the works of Islamic philosophers such as Ibn Sina and Ibn Rushd (Averroes) were drawn on. This expanded the range of ancient works available to medieval Christian scholars, since more Greek work was available to Muslim scholars than had been preserved in Latin commentaries. In 1323, William of Ockham's influential Summa Logicae was released. It is a comprehensive treatise on logic that discusses many fundamental concepts of logic and provides a systematic exposition of different types of propositions and their truth conditions.[221][213][222]

In Chinese philosophy, the School of Names and Mohism were particularly influential. The School of Names focused on the use of language and on paradoxes. For example, Gongsun Long proposed the white horse paradox, which defends the thesis that a white horse is not a horse. The school of Mohism also acknowledged the importance of language for logic and tried to relate the ideas in these fields to the realm of ethics.[223][224]

In India, the study of logic was primarily pursued by the schools of Nyaya, Buddhism, and Jainism. It was not treated as a separate academic discipline and discussions of its topics usually happened in the context of epistemology and theories of dialogue or argumentation.[225] In Nyaya, inference is understood as a source of knowledge (pramāṇa). It follows the perception of an object and tries to arrive at certain conclusions, for example, about the cause of this object.[226] A similar emphasis on the relation to epistemology is also found in Buddhist and Jainist schools of logic, where inference is used to expand the knowledge gained through other sources.[227][228] Some of the later theories of Nyaya, belonging to the Navya-Nyāya school, resemble modern forms of logic, such as Gottlob Frege's distinction between sense and reference and his definition of number.[229]

The syllogistic logic developed by Aristotle predominated in the West until the mid-19th century, when interest in the foundations of mathematics stimulated the development of modern symbolic logic.[112][230] It is often argued that Gottlob Frege’s Begriffsschrift gave birth to modern logic. Other pioneers of the field were Gottfried Wilhelm Leibniz, who conceived the idea of a universal formal language, George Boole, who invented Boolean algebra as a mathematical system of logic, and Charles Peirce, who developed the logic of relatives, as well as Alfred North Whitehead and Bertrand Russell, who condensed many of these insights in their work Principia Mathematica. Modern logic introduced various new notions, such as the concepts of functions, quantifiers, and relational predicates. A hallmark of modern symbolic logic is its use of formal language to codify its insights in a very precise manner. This contrasts with the approach of earlier logicians, who relied mainly on natural language.[231] Of particular influence was the development of first-order logic, which is usually treated as the standard system of modern logic.[232]

References

Notes

- However, there are some forms of logic, like imperative logic, where this may not be the case.[47]

Citations

- Pépin, Jean (2004). "Logos". Encyclopedia of Religion. ISBN 978-0-02-865733-2. Archived from the original on 29 December 2021. Retrieved 29 December 2021.

- "logic". etymonline.com. Archived from the original on 29 December 2021. Retrieved 29 December 2021.

- Hintikka, Jaakko J. "Philosophy of logic". Encyclopedia Britannica. Archived from the original on 28 April 2015. Retrieved 21 November 2021.

- Haack, Susan (1978). "1. 'Philosophy of logics' ". Philosophy of Logics. London and New York: Cambridge University Press. pp. 1–10. ISBN 978-0-521-29329-7. Archived from the original on 7 December 2021. Retrieved 29 December 2021.

- Schlesinger, I. M.; Keren-Portnoy, Tamar; Parush, Tamar (1 January 2001). The Structure of Arguments. John Benjamins Publishing. p. 220. ISBN 978-90-272-2359-3.

- Hintikka & Sandu 2006, p. 13.

- Audi, Robert (1999). "Philosophy of logic". The Cambridge Dictionary of Philosophy. Cambridge University Press. ISBN 978-1-107-64379-6. Archived from the original on 14 April 2021. Retrieved 29 December 2021.

- McKeon, Matthew. "Logical Consequence". Internet Encyclopedia of Philosophy. Archived from the original on 12 November 2021. Retrieved 20 November 2021.

- MacFarlane, John (2017). "Logical Constants: 4. Topic neutrality". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 17 March 2020. Retrieved 4 December 2021.

- Corkum, Philip (2015). "Generality and Logical Constancy". Revista Portuguesa de Filosofia. 71 (4): 753–767. doi:10.17990/rpf/2015_71_4_0753. ISSN 0870-5283. JSTOR 43744657.

- Blair, J. Anthony; Johnson, Ralph H. (2000). "Informal Logic: An Overview". Informal Logic. 20 (2): 93–107. doi:10.22329/il.v20i2.2262. Archived from the original on 9 December 2021. Retrieved 29 December 2021.

- Hofweber 2021.

- Gómez-Torrente, Mario (2022). "Alfred Tarski: 3. Logical consequence". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Retrieved 25 September 2022.

- McKeon, Matthew. "Logical Consequence, Model-Theoretic Conceptions". Internet Encyclopedia of Philosophy. Retrieved 25 September 2022.

- Magnus, P. D. (2005). "1.4 Deductive validity". Forall X: An Introduction to Formal Logic. Victoria, BC, Canada: State University of New York OER Services. pp. 8–9. ISBN 978-1-64176-026-3. Archived from the original on 7 December 2021. Retrieved 29 December 2021.

- Mattingly, James (28 October 2022). The SAGE Encyclopedia of Theory in Science, Technology, Engineering, and Mathematics. SAGE Publications. p. 512. ISBN 978-1-5063-5328-9.

- Craig, Edward (1996). "Formal and informal logic". Routledge Encyclopedia of Philosophy. Routledge. ISBN 978-0-415-07310-3. Archived from the original on 16 January 2021. Retrieved 29 December 2021.

- Hintikka & Sandu 2006, p. 16.

- Gómez-Torrente, Mario (2019). "Logical Truth". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 2 October 2021. Retrieved 22 November 2021.

- Hintikka & Sandu 2006, pp. 16–17.

- Jacquette, Dale (2006). "Introduction: Philosophy of logic today". Philosophy of Logic. North Holland. pp. 1–12. ISBN 978-0-444-51541-4. Archived from the original on 7 December 2021. Retrieved 29 December 2021.

- Hintikka & Sandu 2006, pp. 31–32.

- Haack, Susan (15 December 1996). Deviant Logic, Fuzzy Logic: Beyond the Formalism. University of Chicago Press. pp. 229–30. ISBN 978-0-226-31133-3.

- Groarke, Leo (2021). "Informal Logic". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 12 January 2022. Retrieved 31 December 2021.

- Audi, Robert (1999). "Informal logic". The Cambridge Dictionary of Philosophy. Cambridge University Press. ISBN 978-1-107-64379-6. Archived from the original on 14 April 2021. Retrieved 29 December 2021.

- Johnson, Ralph H. (1999). "The Relation Between Formal and Informal Logic". Argumentation. 13 (3): 265–274. doi:10.1023/A:1007789101256. S2CID 141283158. Archived from the original on 7 December 2021. Retrieved 2 January 2022.

- van Eemeren, Frans H.; Garssen, Bart; Krabbe, Erik C. W.; Snoeck Henkemans, A. Francisca; Verheij, Bart; Wagemans, Jean H. M. (2021). "Informal Logic". Handbook of Argumentation Theory. Springer Netherlands. pp. 1–45. doi:10.1007/978-94-007-6883-3_7-1. ISBN 978-94-007-6883-3. Archived from the original on 31 December 2021. Retrieved 2 January 2022.

- Honderich, Ted (2005). "logic, informal". The Oxford Companion to Philosophy. Oxford University Press. ISBN 978-0-19-926479-7. Archived from the original on 29 January 2021. Retrieved 2 January 2022.

- Craig, Edward (1996). "Formal languages and systems". Routledge Encyclopedia of Philosophy. Routledge. ISBN 978-0-415-07310-3. Archived from the original on 16 January 2021. Retrieved 29 December 2021.

- Simpson, R. L. (17 March 2008). Essentials of Symbolic Logic - Third Edition. Broadview Press. p. 14. ISBN 978-1-77048-495-5.

- Hintikka & Sandu 2006, pp. 22–23.

- Eemeren, Frans H. van; Grootendorst, Rob; Johnson, Ralph H.; Plantin, Christian; Willard, Charles A. (5 November 2013). Fundamentals of Argumentation Theory: A Handbook of Historical Backgrounds and Contemporary Developments. Routledge. p. 169. ISBN 978-1-136-68804-1.

- Walton, Douglas N. (1987). "1. A new model of argument". Informal Fallacies: Towards a Theory of Argument Criticisms. John Benjamins. pp. 1–32. ISBN 978-1-55619-010-0. Archived from the original on 2 March 2022. Retrieved 2 January 2022.

- Engel, S. Morris (1982). "2. The medium of language". With Good Reason: An Introduction to Informal Fallacies. pp. 59–92. ISBN 978-0-312-08479-0. Archived from the original on 1 March 2022. Retrieved 2 January 2022.

- Blair, J. Anthony; Johnson, Ralph H. (1987). "The Current State of Informal Logic". Informal Logic. 9 (2): 147–51. doi:10.22329/il.v9i2.2671. Archived from the original on 30 December 2021. Retrieved 2 January 2022.

- Weddle, Perry (26 July 2011). "36. Informal logic and the deductive-inductive distinction". Argumentation 3. De Gruyter Mouton. pp. 383–8. doi:10.1515/9783110867718.383. ISBN 978-3-11-086771-8. Archived from the original on 31 December 2021. Retrieved 2 January 2022.

- Blair, J. Anthony (20 October 2011). Groundwork in the Theory of Argumentation: Selected Papers of J. Anthony Blair. Springer Science & Business Media. p. 47. ISBN 978-94-007-2363-4.

- Hintikka & Sandu 2006, p. 14.

- D'Agostino, Marcello; Floridi, Luciano (2009). "The Enduring Scandal of Deduction: Is Propositional Logic Really Uninformative?". Synthese. 167 (2): 271–315. doi:10.1007/s11229-008-9409-4. hdl:2299/2995. ISSN 0039-7857. JSTOR 40271192. S2CID 9602882.

- Backmann, Marius (1 June 2019). "Varieties of Justification—How (Not) to Solve the Problem of Induction". Acta Analytica. 34 (2): 235–255. doi:10.1007/s12136-018-0371-6. ISSN 1874-6349. S2CID 125767384.

- "Deductive and Inductive Arguments". Internet Encyclopedia of Philosophy. Archived from the original on 28 May 2010. Retrieved 4 December 2021.

- Van Vleet, Jacob E. (2010). "Introduction". Informal Logical Fallacies: A Brief Guide. University Press of America. pp. ix–x. ISBN 978-0-7618-5432-6. Archived from the original on 28 February 2022. Retrieved 2 January 2022.

- Dowden, Bradley. "Fallacies". Internet Encyclopedia of Philosophy. Archived from the original on 29 April 2010. Retrieved 19 March 2021.

- Stump, David J. "Fallacy, Logical". encyclopedia.com. Archived from the original on 15 February 2021. Retrieved 20 March 2021.

- Maltby, John; Day, Liz; Macaskill, Ann (2007). Personality, Individual Differences and Intelligence. Prentice Hall. ISBN 978-0-13-129760-9.

- Honderich, Ted (2005). "philosophical logic". The Oxford Companion to Philosophy. Oxford University Press. ISBN 978-0-19-926479-7. Archived from the original on 29 January 2021. Retrieved 2 January 2022.

- Haack, Susan (1974). Deviant Logic: Some Philosophical Issues. CUP Archive. p. 51. ISBN 978-0-521-20500-9.

- Falguera, José L.; Martínez-Vidal, Concha; Rosen, Gideon (2021). "Abstract Objects". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 22 January 2021. Retrieved 7 January 2022.

- Tondl, L. (6 December 2012). Problems of Semantics: A Contribution to the Analysis of the Language Science. Springer Science & Business Media. p. 111. ISBN 978-94-009-8364-9.

- Pietroski, Paul (2021). "Logical Form: 1. Patterns of Reason". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 2 October 2021. Retrieved 4 December 2021.

- Kusch, Martin (2020). "Psychologism". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 29 December 2020. Retrieved 30 November 2021.

- Rush, Penelope (2014). "Introduction". The Metaphysics of Logic. Cambridge University Press. pp. 1–10. ISBN 978-1-107-03964-3. Archived from the original on 7 December 2021. Retrieved 8 January 2022.

- King, Jeffrey C. (2019). "Structured Propositions". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 25 October 2021. Retrieved 4 December 2021.

- Pickel, Bryan (1 July 2020). "Structured propositions and trivial composition". Synthese. 197 (7): 2991–3006. doi:10.1007/s11229-018-1853-1. ISSN 1573-0964. S2CID 49729020.

- Craig, Edward (1996). "Philosophy of logic". Routledge Encyclopedia of Philosophy. Routledge. ISBN 978-0-415-07310-3. Archived from the original on 16 January 2021. Retrieved 29 December 2021.

- Michaelson, Eliot; Reimer, Marga (2019). "Reference". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 7 December 2021. Retrieved 4 December 2021.

- MacFarlane, John (2017). "Logical Constants". The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 17 March 2020. Retrieved 21 November 2021.

- Jago, Mark (24 April 2014). The Impossible: An Essay on Hyperintensionality. OUP Oxford. p. 41. ISBN 978-0-19-101915-9.

- Magnus, P. D. (2005). "3. Truth tables". Forall X: An Introduction to Formal Logic. Victoria, BC, Canada: State University of New York OER Services. pp. 35–45. ISBN 978-1-64176-026-3. Archived from the original on 7 December 2021. Retrieved 29 December 2021.

- Angell, Richard B. (1964). Reasoning and Logic. Ardent Media. p. 164. ISBN 978-0-89197-375-1.

- Tarski, Alfred (6 January 1994). Introduction to Logic and to the Methodology of the Deductive Sciences. Oxford University Press. p. 40. ISBN 978-0-19-802139-1.